Other Ones and Zeros: quantum computing made probable

It would appear that one and zero are not enough. At the very least, their usual arrangement inside our classically designed computers is in for some serious revision. This November, the number of releases from researchers working on quantum computing spiked sharply. In all probability, that has more to do with scheduling and funding than with some invisible force or a supposed “hundredth monkey” effect. The theoretical work stretches back decades, into the dark recesses of cryptography and the woolier constructs of particle physics. Now the beginnings of actual hardware appear to be taking shape.

The blue bars in the timeline above represent articles on quantum computing appearing at www.physorg.com.

We haven’t quite hit the peak of computing power that the good old shoving-electrons-through-wires school provides. However, its limits are known to us. Before we reach the point where the only way to over-clock the ultimate game machine is a superconductivity-inducing bath in liquid hydrogen, there should be a physical framework for computing at the subatomic level. This is where quantum computing becomes more than just a theory.

A good place to start is a recent study published in Physical Review Letters by Dr. Sean D. Barrett and Dr. Thomas M. Stace. The short of it is that all proposed schemes for building a quantum computer are plagued by data loss from various sources. One of the biggest sources of loss is disappearing qubits. Qubits are the basic building blocks of quantum computers: they are quite literally “quantum bits” which, through superposition (the ability of a quantum system to exist in a combination of its possible states at once), can be arranged into huge processing arrays in very small spaces. But how best to arrange these qubits, given their inherent instability?

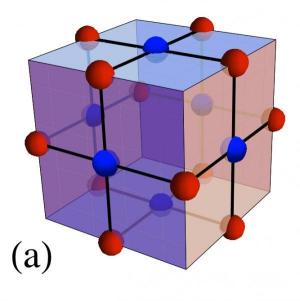

Barrett and Stace propose that by arranging the qubits in a lattice they can, in effect, reinforce the data well enough to tolerate the loss of almost 25% of the qubits. As Dr. Barrett puts it, “Just as you can often tell what a word says when there are a few missing letters, or you can get the gist of a conversation on a badly-connected phone line, we used this idea in our design for a quantum computer.” He then adds, “It’s surprising, because you wouldn’t expect that if you lost a quarter of the beads from an abacus that it would still be useful.” A quantum-strange way of doing enough with less so you could eventually do more. These quotes come from a very informative and concise write-up of the study in Science Daily.

Illustration of the error correcting code used to demonstrate robustness to loss errors. Each dot represents a single qubit. The qubits are arranged on a lattice in such a way that the encoded information is robust to losing up to 25 percent of the qubits (Credit: Sean Barrett and Thomas Stace)

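Barrett’s missing-letters analogy can be played with in a few lines of Python. What follows is a purely classical toy and not the scheme from Barrett and Stace: it copies one bit onto every site of a small grid, erases each site with some probability, and reads the bit back from any survivor. Real quantum codes cannot work this way, because an unknown quantum state cannot be copied (the no-cloning theorem), which is why a quantum lattice code has to spread its information across many qubits instead. The one idea the toy does share is that the losses are “heralded”: the decoder knows exactly which beads are missing from the abacus. The grid size, loss probability and function names are all made up for illustration.

```python
import random

def encode(bit, rows=4, cols=4):
    """Hypothetical toy encoding: copy one classical bit onto every grid site."""
    return [[bit] * cols for _ in range(rows)]

def lose(grid, p, rng):
    """Erase each site independently with probability p.
    A lost site is marked None, so we always know where the losses are."""
    return [[None if rng.random() < p else v for v in row] for row in grid]

def decode(grid):
    """Read the bit from the first surviving site; return None if all are lost."""
    for row in grid:
        for v in row:
            if v is not None:
                return v
    return None

def failure_rate(p, trials=10_000, seed=1):
    """Fraction of trials in which the bit could not be recovered."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        if decode(lose(encode(bit), p, rng)) != bit:
            failures += 1
    return failures / trials

# Losing all 16 sites at p = 0.25 has probability 0.25**16 (about 2e-10).
print(failure_rate(0.25))
```

In this toy, a single surviving copy is enough, so only near-total loss makes it fail. The genuinely quantum version of the problem is far harder, which is why tolerating the loss of a quarter of the qubits is the surprising part.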

The quantum zoo is a strange place full of critters that “ain’t from around here”, and the monkey house is called “Entanglement”. That a beam of light can be split in two, and that measurements on one beam should then be correlated with measurements on the other, now spatially separate, beam seems to defy all reason. But that hasn’t stopped some very clever members of this planet’s top monkey troop from trying to put this bizarre property to work. Quantum entanglement might be one of the first areas of quantum computing to go public, with schemes for quantum-based computer memory and data transmission (I’m ignoring quantum-based encryption for the moment because of its problematic relationship to “the public”: the military intelligence people have invested heavily here).

Back in January of this year, a team of European scientists demonstrated quantum entanglement working inside a solid-state chip. This month, a group from Caltech released their work on creating a quantum state stored in four spatially distinct atomic memories, while another group of researchers at the Niels Bohr Institute at the University of Copenhagen was able to store quantum information using two ‘entangled’ light beams. For a technology that is still experimental, 2010 could prove to be the year that various scientists around the world started to straighten out the entanglements and made real progress towards creating the next physical substrate for computation. Or not: like artificial intelligence, fusion reactors, or long-range manned space flight, this could be a period of first glimmers and early successes before the obstacles become apparent and maybe insurmountable. Either, or, or maybe both: we are talking about quantum physics here, so any guess is just another wave-function collapse, yes?
