Smelling Light and Hearing Shapes

Sense and Sensibility 2.0

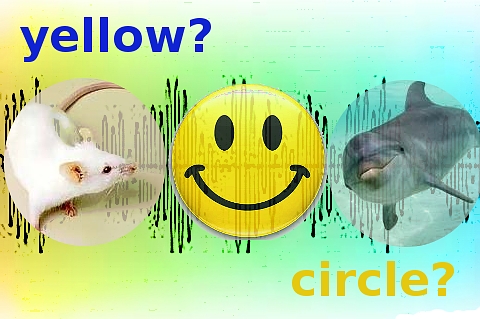

Synesthesia, the translation of one sensory mode into another, is no longer the domain of New Age mystics and lightshow designers. A deeper understanding of the neural structures underlying perception is creating very literal crossovers in how we think about the senses. With this, the possibility of a radical rewiring of how we experience the world is visible (tasteable, audible, touchable…).

Two recent items from Science Daily:

At Harvard, neurobiologists are using optogenetically engineered mice that respond to light through their olfactory system, the sense of smell. Because light is a more controllable stimulus than airborne odors, the scientists are using this technique to map out how the olfactory system reacts and to trace its finer structures in the mammalian brain.

Prof. Venkatesh N. Murthy, the lead investigator, explains:

“In order to tease apart how the brain perceives differences in odors, it seemed most reasonable to look at the patterns of activation in the brain, but it is hard to trace these patterns using olfactory stimuli, since odors are very diverse and often quite subtle. So we asked: What if we make the nose act like a retina?”

One can only wonder what the sensory world of Prof. Murthy’s subjects is like… Do optogenetically engineered mice dream of blue roses?

At McGill University, research is bringing to light our ability to detect shape by sound…

Shape is an inherent property of objects existing in both vision and touch but not sound. Researchers at The Neuro (The Montreal Neurological Institute and Hospital) posed the question ‘can shape be represented by sound artificially?’ “The fact that a property of sound such as frequency can be used to convey shape information suggests that as long as the spatial relation is coded in a systematic way, shape can be preserved and made accessible, even if the medium via which space is coded is not spatial in its physical nature,” says Jung-Kyong Kim, a PhD student in Dr. Robert Zatorre’s lab at The Neuro and lead investigator in the study.

In other words, much as our ocean-dwelling dolphin cousins use echolocation to explore their surroundings, our brains can be trained to recognize shapes represented by sound, and the hope is that people with impaired vision could be trained to use this as a tool. In the study, blindfolded sighted participants were trained to recognize tactile spatial information using sounds mapped from abstract shapes. Following training, the individuals were able to match auditory input to tactually discerned shapes and showed generalization to new sound-touch pairings.
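For the curious, here is a rough sketch of what "coding the spatial relation in a systematic way" could look like. The mapping the McGill study actually used is not described here, so this is purely an assumption for illustration: vertical position becomes pitch (higher rows sound higher) and horizontal position becomes time (the shape is scanned left to right). The names `row_frequencies` and `shape_to_sound`, and all parameter values, are hypothetical.

```python
import math


def row_frequencies(n_rows, f_low=200.0, f_high=2000.0):
    """Give each row a pitch: top row highest, spaced logarithmically.

    Assumed mapping for illustration only, not the study's method.
    """
    if n_rows == 1:
        return [f_high]
    ratio = f_high / f_low
    return [
        f_low * ratio ** ((n_rows - 1 - r) / (n_rows - 1))
        for r in range(n_rows)
    ]


def shape_to_sound(grid, sample_rate=8000, column_seconds=0.1):
    """Turn a 2D grid of 0/1 cells into a list of audio samples.

    Columns are played one after another (left to right); every
    filled cell in a column adds a sine tone at its row's frequency.
    """
    n_rows = len(grid)
    n_cols = len(grid[0])
    freqs = row_frequencies(n_rows)
    samples_per_col = round(sample_rate * column_seconds)

    samples = []
    for c in range(n_cols):
        active = [freqs[r] for r in range(n_rows) if grid[r][c]]
        for i in range(samples_per_col):
            t = i / sample_rate
            value = sum(math.sin(2 * math.pi * f * t) for f in active)
            # Divide by the row count so the mix stays within [-1, 1].
            samples.append(value / n_rows)
    return samples


# A diagonal line from top-left to bottom-right: heard as a falling pitch.
diagonal = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]
audio = shape_to_sound(diagonal)
```

Under this assumed scheme a horizontal line would sound like one steady tone, a vertical line like a brief chord, and a diagonal like a rising or falling sweep, which is the sense in which shape survives in a medium that is not itself spatial.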

“We live in a world where we perceive objects using information available from multiple sensory inputs,” says Dr. Zatorre, neuroscientist at The Neuro and co-director of the International Laboratory for Brain Music and Sound Research. “On one hand, this organization leads to unique sense-specific percepts, such as colour in vision or pitch in hearing. On the other hand, our perceptual system can integrate information present across different senses and generate a unified representation of an object. We can perceive a multisensory object as a single entity because we can detect equivalent attributes or patterns across different senses.” Neuroimaging studies have identified brain areas that integrate information coming from different senses, combining that input to create a complete and comprehensive picture.
